Twitter is deploying new features on Thursday that it says will keep pace with disinformation and influence operations targeting the 2020 election.

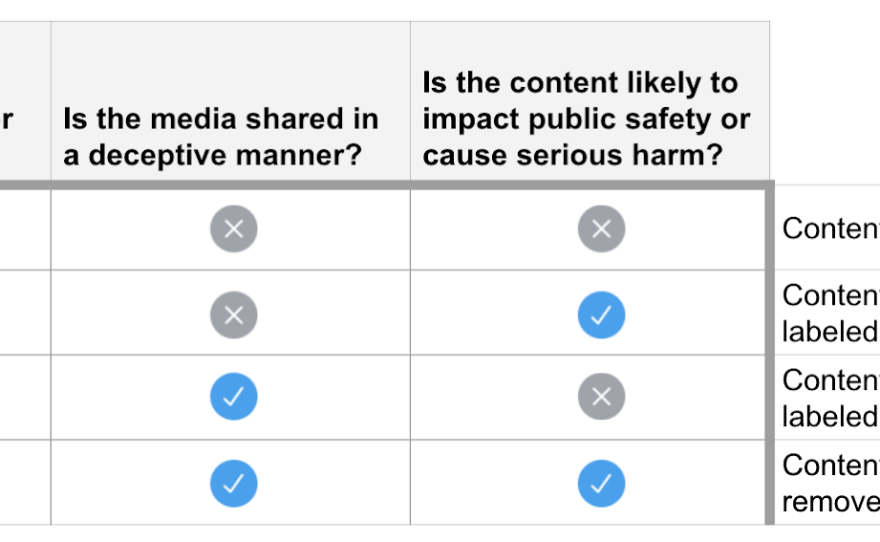

A new policy on "synthetic and manipulated media" attempts to flag and provide greater context for content that the platform believes to have been "significantly and deceptively altered or fabricated."

Starting Thursday, when users scroll through posts, they may begin seeing Twitter's new labeling system — a blue exclamation point and the words "manipulated media" underneath a video, photo or other media that the platform believes to have been tampered with or deceptively shared.

This could include deepfakes, high-tech videos that depict events that never happened, or "cheapfakes" made with low-tech editing, like speeding up a video or slowing it down.

Twitter's head of site integrity, Yoel Roth, told NPR that moderators will be watching for two things:

"We're looking for evidence that the video or image or audio have been significantly altered in a way that changes their meaning," Roth said. "In the event that we find evidence that the media was significantly modified, the next question we ask ourselves is, is it being shared on Twitter in a way that is deceptive or misleading?"

If the media has been modified to the extent that it could "impact public safety or cause serious harm," then Twitter says it will remove the content entirely.

Twitter says this could include threats to the physical safety of a person or group, or to an individual's ability to exercise their human rights, such as participating in elections.

When users tap on a newly labeled post, Twitter will provide "expert context" explaining why the content isn't trustworthy.

If someone tries to retweet or "like" the content, they'll receive a message asking if they really want to amplify an item that is likely to mislead others.

Twitter says it may also reduce the visibility of the misleading content.

We know that some Tweets include manipulated photos or videos that can cause people harm. Today we’re introducing a new rule and a label that will address this and give people more context around these Tweets pic.twitter.com/P1ThCsirZ4

— Safety (@Safety) February 4, 2020

Evolution of interference

Twitter was caught "flat-footed" by the active measures that targeted the U.S. election in 2016, Roth said. During that year, influence specialists used fake accounts across social media to spread disinformation and amplify discord.

That work never really stopped, national security officials have said, and officials warned ahead of Super Tuesday's primaries that it continues at a comparatively low but steady state.

Roth told NPR that while Twitter has not traced specific tweets about the 2020 campaign back to Russia, it is trying to apply what it learned from the last presidential race, including about the use of fake personae.

"These would be accounts that were pretending to be Americans to try and influence certain parts of the conversation," Roth said in an interview with NPR's Ari Shapiro.

While this tactic has remained a part of Russia's toolkit, the playbook also has continued to expand.

In 2018, Russia didn't just interfere in the election; influence-mongers also tried to make it look like there was more interference than there actually was.

"We saw activity that we believe to have been connected with the Russian Internet Research Agency that was specifically targeting journalists in an attempt to convince them that there had been large scale activity on the platform that didn't actually happen," Roth said.

This time around, rather than creating their own messaging, Russia and other foreign actors are amplifying the voices of real Americans, Roth said. By re-sharing extreme, albeit authentic, content, they're able to manipulate the platform without introducing any additional misinformation.

"I think in 2020, we're facing a particularly divisive political moment here in the United States and attempts to capitalize on those divisions among Americans seem to be where malicious actors are heading," Roth said.

The social network has studied fake accounts and coordinated manipulation efforts and has put its new policies to the test during elections in the European Union and India.

Roth says Twitter has also built a community of experts, including community moderators and partners in government and academia. Another priority is transparency.

When the platform uncovers "state-backed information operations," it shares the information publicly.

Weaponizing deception

Foreign actors aren't the only ones likely to be impacted by this new policy. Misinformation can also come from American political campaigns trying to use social media to their advantage.

Roth says he's already seen American 2020 candidates employ questionable tactics to get voters' attention.

"We've seen ... accounts that are pretending to be compromised. So they'll sort of pretend that they were hacked and then share content that they might otherwise not have," Roth said, along with "large scale attempts to mobilize volunteers to share content on the service."

While neither of these actions necessarily violates Twitter's policies, there are moments where they can cross the line.

For example, former New York City Mayor Michael Bloomberg's presidential campaign hired hundreds of temporary employees to post campaign messages on Facebook, Twitter and Instagram.

Twitter decided to suspend dozens of these pro-Bloomberg accounts. Roth says the decision was not based on the fact that these individuals were being paid to post content.

"Our focus whenever we're enforcing our policies is to look at the behavior that the accounts are engaged in, not who we think is behind them or what their motivations were, because in most instances we don't know," Roth said.

Twitter determined that the suspended accounts were "engaged in spam."

If a large number of people organically decide to post the same message or similar messages at the same time, that isn't likely to qualify as spam, Roth said.

But if individual users create multiple accounts just for the purpose of tweeting a specific message, then it does. They're over-amplifying their voice in a way that constitutes manipulation.

According to an NPR/PBS NewsHour/Marist poll, 82 percent of Americans think they will read misleading information on social media this election. Three-quarters said they don't trust tech companies to prevent their platforms from being misused for election interference.

Roth said the investments Twitter has made since 2018 to protect the "integrity of the platform" and ensure the security of its users have been significant, but he called that a balancing act.

This is why Twitter's new policy doesn't remove all manipulated content. To combat false information, Roth says Twitter is trying to highlight information from trustworthy sources.

For example, while Twitter isn't removing all false information regarding the coronavirus, it's making sure that information from the Centers for Disease Control and Prevention is displayed prominently.

"I think a platform has a responsibility to create a space where credible voices can reach audiences that are interested in understanding what they have to say," Roth said.

"That doesn't mean that we don't allow discussion of these issues throughout the product, but we want to ensure that when you first come to Twitter, the information that you see in our product is going to come from authoritative sources."

Copyright 2023 NPR. To see more, visit https://www.npr.org.